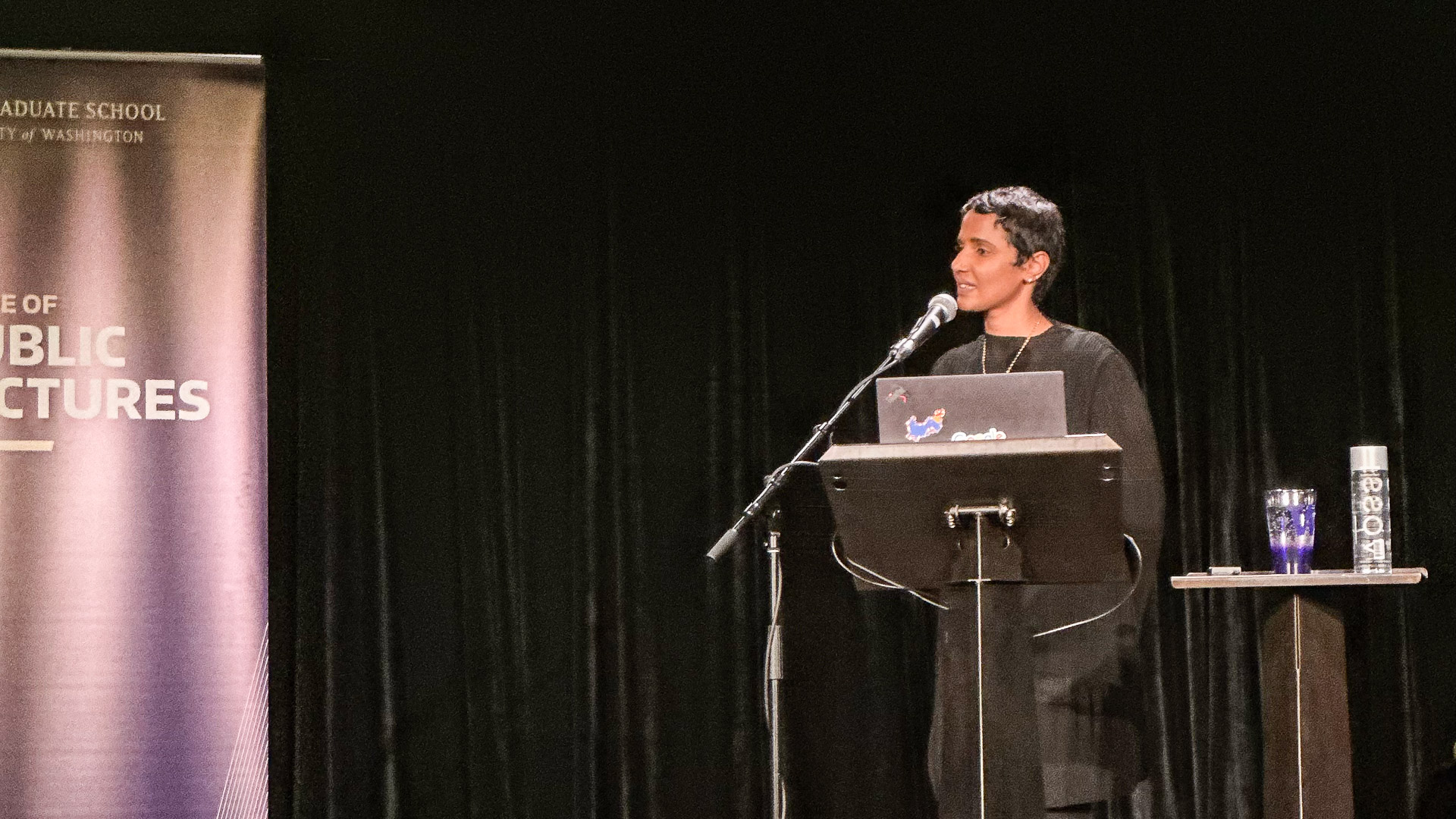

(Photo by Hanxiao Zhang)

On Tuesday evening Comm Lead community members gathered at Town Hall Seattle to listen to Arathi Sethumadhavan of Google discuss “Embracing a Human-Centric Approach to AI Development.”

We asked students and alumni for their reflections on the event, and how AI development can be done in a human-centered way. Here’s what they said:

Kyle Wickline

Arathi Sethumadhavan gave me hope about the possibility of ethical, human-centric AI development. This can be accomplished by actively involving stakeholders and end users throughout the process, maintaining continuous user research and feedback loops, collaborating with diverse domain experts to ensure accuracy and representation, prioritizing the needs of underrepresented communities, and establishing guidelines to integrate ethical practices. Sethumadhavan’s overarching goal to create AI with human needs as a priority, foster well-being, and uphold all individuals’ rights was inspiring.

Molly Spring

In my line of work, we have a saying: you are not your audience. This adage cautions us against making too many assumptions about how our solution will be received and encourages the participation of a broad group of stakeholders in our ideation processes.

When we consider the development of AI tools and technology, this becomes even more imperative. Dr. Sethumadhavan urges us to consider how diverse diversity is in our work: are we including as broad an audience as possible? Have we challenged our own assumptions about how language is spoken, problems are solved, and experiences are interpreted? With the increased availability of low-code or no-code generative AI tools, it is also important to continue seeking feedback and input from affected stakeholders beyond deployment.

UW Comm Lead students gathered at Town Hall Seattle, waiting for Arathi Sethumadhavan’s talk on human-centered AI development to begin (Photo by Jean Wu)

Naomi Vettath

Dr. Sethumadhavan called out several different ways AI can be developed in a human-centric way:

- Engage with the different types of stakeholders – technical experts (who might be impacted by this new AI tech), general public (consumers of AI), possible groups of people who benefit from it. Understand their concerns. Engage with them in a constant loop, not just at the beginning. Iterate with them in mind.

- Responsible deployment – disclose information clearly and obtain informed consent (not a blanket consent).

- Engage with domain experts – it’s okay not to have all the answers; know who to collaborate with to find the answers.

- Question your assumptions.

- Advocate for underrepresented communities.

- When it comes to scaling – create best practices and a community jury.

Trischa Schramm

The event underscored that human-centric AI development requires a commitment to advocating for diverse communities, engaging all stakeholders, and adhering to best practices for informed consent. It requires carefully considering those who might be most impacted by a given technology and working with them to address potential problems and concerns before deploying the technology at a broader scale. And it involves a deep engagement with end users throughout the design process, ensuring that the technology is shaped by, and for, those it affects the most.

Lei Cai

Driven by the work of experts like Dr. Arathi Sethumadhavan, the focus on human-centric factors in AI development is increasingly prominent. This evolution is marked by greater involvement of diverse stakeholders (‘Human-in-the-Loop’), enhanced AI principles by industry leaders, and a rise in critical voices, all steering AI towards a more human-centered approach. Dr. Sethumadhavan’s insight, ‘It is ok to not have all the answers’, underscores the importance of continuous reflection in AI development, ensuring it aligns with human values and needs.

Kevin Laird

Developing AI in a human-centric way requires the voices of a diverse population of users and the informed consent of everyone who chooses to participate in the development of AI. It’s important to acknowledge AI as a tool and to fact-check everything it produces. One important aspect that Arathi mentioned is that we should incorporate responsibilities and objectives stated by tech companies such as Google or Microsoft into our own work when using AI. Is my use of this AI tool socially beneficial? Is it accountable to people? Is it reliable and safe? It’s critical that every user is mindful of these and other questions when using AI for good.

Gautam Malyala

Engaging users and stakeholders in a continuous feedback loop in every iteration of product development will play a key role in making AI more human-centric. The teams that are involved in building AI have a responsibility to incorporate such best practices into their workflow. Furthermore, enlisting knowledge from a diverse array of people, cultures, and languages will make the outcomes of human-centered AI design more effective and humane.

Qi Su

After engaging with the event, I believe fostering a human-centric approach in AI development involves prioritizing ethical considerations and diverse perspectives. Emphasizing transparency, accountability, and inclusion throughout the development process ensures that AI technologies align with human values and needs, promoting responsible and beneficial applications in various domains.

Students listen to Arathi Sethumadhavan’s talk (Photo by Zhetong Li)

University of Washington
